Reverb is arguably one of the most widely used effects in modern recording, and probably one of the most misunderstood. As with so many things, we’ve spent decades perfecting different ways to imitate something that occurs on its own in nature.

This month we’ll take a look at one of modern recording’s favorite effects – how it has evolved, its use and its misuse. Let’s start with a little bit of history.

Early Reflections

In the earliest recordings, the only reverb was what occurred naturally in the recording environment. The sound of the room itself was picked up by the microphone (and in most cases it was just that – one microphone), and rooms with great sonic characteristics (mainly theaters, symphony halls and the like) were sought after as recording environments. This worked fine for the recordings of the day, which were mainly of the orchestral and operatic genres.

In the post-WWII Big Band era of the late 1940s and early 1950s, radio began to play an increasingly important role in how audiences consumed recorded music. Improvements in microphone technology and the advent of audio tape made it possible for recording engineers of the day to experiment with mic placement, raising awareness of reverb even though the options for controlling it were still limited. One of the first documented uses of natural (ambient) reverb to intentionally enhance a recording was by engineer Robert Fine, who introduced ambient mics on some of the early “Living Presence” recordings on Mercury Records.

[Figure: The first use of artificial reverb.]

It was none other than Bill Putnam, Sr., founder of Universal Audio, who pioneered the use of artificial reverb in recordings in 1947. Putnam converted his studio’s bathroom into one of the first purpose-built echo chambers, placing a speaker in one corner and a microphone in another, and mixing the chamber’s sound with a live recording. That unique sound helped make his Universal Records label’s first recording, “Peg o’ My Heart” by The Harmonicats, a runaway hit, and Putnam went on to design reverb chambers for his studios in Chicago and Los Angeles. Other studios followed suit (including Capitol Records, whose chambers beneath its building in L.A. are still in use), and the sound of echo chambers dominated the recordings of the 1950s.

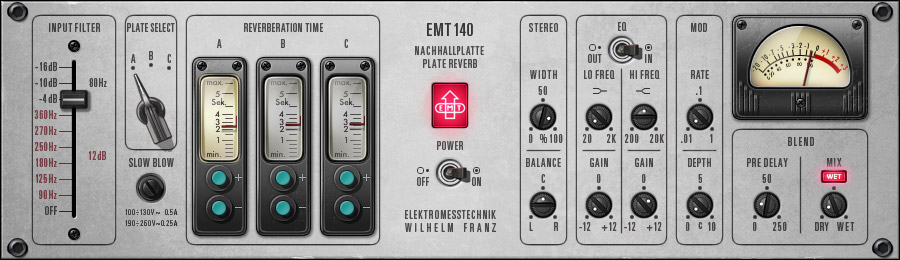

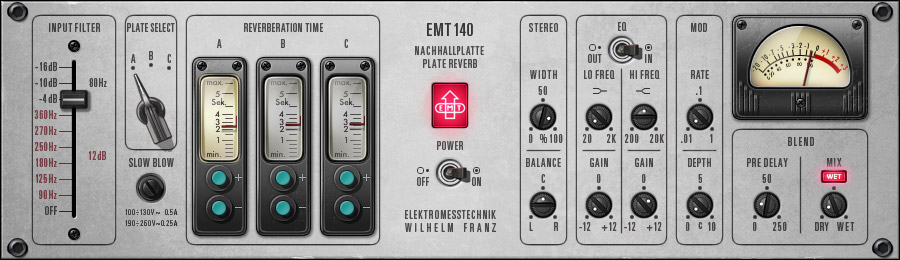

As groundbreaking as Putnam’s echo chamber concept was, it still relied on the natural ambience and reverb of a real space. It wasn’t until 1957 that the German company Elektro-Mess-Technik (EMT) unveiled the EMT 140, the first plate reverb. The famed EMT 140 (and subsequent units) worked by attaching a small transducer (essentially a loudspeaker driver) to the center of a thin sheet-metal plate; vibrations from the transducer traveled across the surface of the plate and were picked up by one or more small pickups attached to its edge. The result was a dense, warm sound that emulated natural room echo but had a character all its own. And while EMT plate reverbs were large and unwieldy, they were still a cheaper and more versatile alternative to building a dedicated echo chamber.

Another technology that emerged during the 1950s was spring reverb. A spring reverb works in much the same way as a plate, but substitutes springs for the metal plate. Because springs take up far less space, spring reverbs became popular where plate reverbs were impractical, including early guitar amps (Fender’s being the best known) and Hammond organs.

[Figure: Lexicon 224: The quintessential '80s reverb.]

The advent of digital technology in the late 1970s and early 1980s changed the face of most things audio-related, including reverb. Digital reverbs made it possible to create “programs” that emulated the natural ambience of any space, as well as the sound of plate, spring and other electronic reverb sources. In almost no time at all, a veritable flood of digital reverb and multi-effects boxes appeared on the market. Some of the most popular units included the EMT 250, Lexicon’s 224 and 480, and Yamaha’s Rev7 and SPX90. It was now even possible to modify the parameters of those programs to create effects that don’t occur naturally, including artificially altered early reflections (the first reflected sounds) and pre-delay (the time before the first reflected sound is heard), and even reverse and gated reverb (the latter probably one of the most overused snare effects of the 1980s).

Less is More

In the early days of recording, the only reverb on a record was that of the room the recording took place in. Studios were prized for their natural ambience. As multitracking evolved, studios were designed to be fairly “dead” and mics were placed close to each instrument to capture as much direct sound as possible, with minimal reflections from the room. A single reverb device (usually a plate or chamber) was then used to create an artificial “room” ambience.

In today’s DAW-oriented world, signal processing is cheap and plentiful. Even entry-level recording programs offer a multitude of reverbs, and today’s recordings often employ one or more reverbs on each instrument. The challenge is no longer which reverb to use, but what combination of reverbs creates a cohesive and natural sound.

Not surprisingly, it’s easy to overdo it. In fact, excessive or poorly used reverb is one of the most common mistakes inexperienced recordists make. An instrument’s direct sound is important in establishing directionality and clarity. Add too much reverb and your mix can easily become a lush pool of mush. One general guideline to consider is that, unless you’re intentionally after a special effect, the best use of reverb is typically when it’s almost imperceptible within your mix.

Anatomy of a Reverb

At first look, many of the parameters of reverb units can be pretty confusing. We can simplify things by breaking them down into basic physics.

Like throwing a stone into a pool of water, sound emanates from the source in waves. Those waves eventually hit multiple surfaces (walls, ceiling, floor, seating, whatever) and echo back, mixing with the original sound. The way we hear that sound depends on several factors — how far away those various reflective surfaces are, what they’re made of, where our ears are located in relation to the original and reflected sound waves, and even other subtle factors like temperature, humidity, altitude and more. In most cases, what we hear is the product of thousands of echoes, reflected many times.

Our brains decode this information in various ways. The first echoes that occur when sound waves hit surfaces (early reflections) and the amount of time between the initial sound and those first reflections (pre-delay) work together to tell us how large the space is, and what our position is within the space.
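
To put rough numbers on pre-delay, here is a minimal Python sketch, assuming a simplified 2D room, a single flat wall, and a speed of sound of about 343 m/s (dry air near 20 °C). It uses the standard image-source trick: a reflected path is as long as a straight line from a mirror image of the source to the listener, so the gap before the first reflection is the extra path length divided by the speed of sound. The function names and example positions are hypothetical, for illustration only.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for dry air at about 20 °C


def distance(a, b):
    """Straight-line distance between two (x, y) points, in metres."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def first_reflection_delay_ms(source, listener, wall_x):
    """Gap (ms) between the direct sound and one reflection off a flat wall
    lying along the line x = wall_x (simplified 2D model)."""
    # Image-source trick: mirror the source across the wall. The reflected
    # path is as long as the straight line from this image to the listener.
    image = (2.0 * wall_x - source[0], source[1])
    extra_path = distance(image, listener) - distance(source, listener)
    return extra_path / SPEED_OF_SOUND * 1000.0


# Small room: source 1 m from the wall, listener 3 m from it.
small = first_reflection_delay_ms((1.0, 0.0), (3.0, 0.0), 0.0)
# Same layout scaled up tenfold, as in a large hall.
large = first_reflection_delay_ms((10.0, 0.0), (30.0, 0.0), 0.0)
print(f"small room: {small:.1f} ms, large hall: {large:.1f} ms")
```

Scaling the whole layout up tenfold stretches the gap from roughly 6 ms to roughly 58 ms, which is the kind of difference our ears use to judge the size of a space.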

The length of time until the echoes die away (decay) also helps determine the size of the space, but the way that decay interacts with the early reflections also makes a difference. For example, a small but reflective room (e.g., a tiled bathroom) can have a decay time similar to a larger hall, but the smaller room’s early reflections will arrive sooner.
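
The bathroom-versus-hall comparison can be checked with Sabine’s formula, a classic room-acoustics estimate that the column itself doesn’t use: RT60 ≈ 0.161 · V / A, where V is the room volume in cubic metres and A is the total absorption (surface area times average absorption coefficient). This sketch assumes simple shoebox rooms; the dimensions and absorption coefficients are hypothetical values chosen for illustration, not measurements, and Sabine’s estimate becomes unreliable in heavily damped rooms.

```python
def sabine_rt60(length, width, height, avg_absorption):
    """Estimate RT60 (seconds) for a shoebox room with Sabine's formula.

    Dimensions are in metres; avg_absorption is an average absorption
    coefficient between 0 (perfectly reflective) and 1 (fully absorbent).
    """
    volume = length * width * height
    surface = 2.0 * (length * width + length * height + width * height)
    absorption_area = surface * avg_absorption  # square metres ("metric sabins")
    return 0.161 * volume / absorption_area


# Hypothetical tiled bathroom: tiny volume, very hard surfaces.
bathroom = sabine_rt60(2.5, 2.0, 2.5, avg_absorption=0.03)
# Hypothetical concert hall: huge volume, much more absorbent surfaces.
hall = sabine_rt60(30.0, 20.0, 12.0, avg_absorption=0.25)
print(f"bathroom RT60 ≈ {bathroom:.1f} s, hall RT60 ≈ {hall:.1f} s")
```

Both rooms land at around two seconds of decay, yet the bathroom’s nearby walls return their first reflections much sooner, which is what the text says distinguishes the two.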

[Figure: Old School: a custom "Men's Room" echo chamber.]

The tonal color of the reflections also plays a critical role. The reverb in that tiled bathroom will sound considerably brighter than that of a larger room with wood or fabric-covered walls. Larger halls also attenuate different frequency ranges at different rates, and which ranges linger longest also affects our perception of the space.

Other factors also affect our perception, including density (how tightly packed the individual reflections are) and diffusion (the rate at which the reflections increase in density following the original sound). A large room with parallel walls will usually have a lower diffusion rate than a similarly sized room with non-parallel or irregularly shaped walls.
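
How quickly reflections pile up can be estimated with a well-known result from the image-source model of a room: the number of reflections arriving per second at time t after the direct sound grows roughly as 4πc³t²/V, where c is the speed of sound and V is the room volume. The minimal sketch below (function name hypothetical) shows that density climbs with the square of time, and much faster in small rooms. It is a statistical average only; it does not capture the effect of parallel versus irregular walls described above.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s


def echo_density(t, volume):
    """Approximate reflections per second arriving t seconds after the
    direct sound, in a room of the given volume (m³): 4*pi*c^3*t^2 / V."""
    return 4.0 * math.pi * SPEED_OF_SOUND ** 3 * t ** 2 / volume


for name, volume in [("tiled bathroom", 12.5), ("concert hall", 7200.0)]:
    for t_ms in (10, 50, 100):
        density = echo_density(t_ms / 1000.0, volume)
        print(f"{name:15s} t = {t_ms:3d} ms: ~{density:,.0f} reflections/s")
```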

As you can imagine, creating a natural sounding ambience is a complex, multi-faceted process that involves programming dozens of interdependent parameters. For the most part, it’s best to find a reverb program that comes close to what you’re looking for, and keep the tweaking to a minimum.

What Works Where

As with most effects, there are no hard and fast rules, other than the age-old adage “trust your ears.” But here are a few general guidelines to start with.

As stated earlier, less is more. You’ll achieve more natural-sounding results using a few reverbs rather than many. One short, bright program (small room or plate) and a larger, warmer program (large room or hall) will often be enough to cover most of your mix. For best results, insert reverbs into an effect or aux buss rather than directly into each track’s signal chain. This lets you use the same reverb for multiple tracks while varying the send level for each source.
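
The send/return routing can be modeled in a few lines. This is a toy Python sketch, not a real reverb: a single wet-only feedback comb filter stands in for the reverb, and the function names, send levels, and mono sample lists are hypothetical. What matters is the routing: every track feeds one shared reverb through its own send level, and a single return is added back to the dry mix.

```python
def feedback_comb(signal, delay, feedback):
    """Wet-only feedback comb filter, a crude stand-in for a reverb:
    y[n] = x[n - delay] + feedback * y[n - delay]. The tail is cut off
    at the input length to keep the example short."""
    out = [0.0] * len(signal)
    for n in range(delay, len(signal)):
        out[n] = signal[n - delay] + feedback * out[n - delay]
    return out


def mix_with_shared_reverb(tracks, sends, reverb, return_level=1.0):
    """Sum the dry tracks, feed a send-weighted copy of each into ONE
    shared reverb on an aux bus, and add the reverb return to the mix."""
    length = max(len(track) for track in tracks)
    dry_bus = [0.0] * length
    aux_bus = [0.0] * length
    for track, send in zip(tracks, sends):
        for n, sample in enumerate(track):
            dry_bus[n] += sample          # dry signal goes straight to the mix
            aux_bus[n] += send * sample   # per-track send level into the aux
    wet = reverb(aux_bus)                 # one reverb instance for every track
    return [d + return_level * w for d, w in zip(dry_bus, wet)]


# Two one-sample "hits": track A is sent to the reverb, track B is not.
track_a = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
track_b = [0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
mix = mix_with_shared_reverb(
    [track_a, track_b], sends=[0.5, 0.0],
    reverb=lambda bus: feedback_comb(bus, delay=2, feedback=0.5),
)
print(mix)
```

Changing how much of a track you hear in the reverb means changing its send value rather than adding another reverb, which keeps every source in the same artificial “room.”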

[Figure: The EMT 140 Plate Reverb plug-in for the UAD platform.]

Drums and other percussive instruments typically sound more realistic with small to mid-sized rooms (shorter reverb tails, shorter pre-delay) or plate programs. A longer pre-delay can create the impression of a “phantom” doubled attack, while a longer reverb decay can compromise directionality and clarity. Too much high-frequency content can create a harsh, brittle sound, particularly on snare drums, and lower density settings can sound coarse and unnatural on drums. Higher densities and warmer reverbs will generally deliver better results.

Acoustic instruments like strings, woodwinds and some vocals can benefit from larger room and hall settings and longer pre-delay times, which can help smooth the sound and add depth. Those larger spaces can also be useful in widening a stereo image. Overused, though, a large room sound can “blur” an instrument’s attack and create a “swimmy” mix that lacks definition and directionality.

One trick for defining, rather than blurring, the imaging in your mix is to use reverb in combination with delay. Pan the original sound slightly to one side. Delay the reverb return slightly (try anywhere from 3 to 10 ms) and pan it to the opposite side. This works particularly well for separating sounds in similar tonal ranges, like multiple stacked guitar tracks.
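
Here is a minimal Python sketch of that trick, assuming mono sample lists, a 48 kHz sample rate, and a common sine/cosine constant-power pan law. The helper names, the default pan position of -0.3 (“slightly left”) and the 5 ms default delay (inside the suggested 3 to 10 ms range) are assumptions for illustration; in a DAW the same thing is done with a short delay on the reverb return and its pan control.

```python
import math


def constant_power_pan(pan):
    """(left_gain, right_gain) for pan from -1.0 (hard left) to +1.0 (hard right),
    using a sine/cosine constant-power pan law."""
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)


def ms_to_samples(ms, sample_rate=48000):
    """Convert a delay in milliseconds to a whole number of samples."""
    return round(ms * sample_rate / 1000.0)


def place_source_and_return(dry, wet, source_pan=-0.3, return_delay_ms=5.0,
                            sample_rate=48000):
    """Pan the dry source slightly to one side, delay the reverb return by a
    few milliseconds, and pan the return to the mirror-image position.
    Returns (left, right) lists of samples."""
    offset = ms_to_samples(return_delay_ms, sample_rate)
    length = max(len(dry), len(wet) + offset)
    left, right = [0.0] * length, [0.0] * length
    dry_l, dry_r = constant_power_pan(source_pan)
    wet_l, wet_r = constant_power_pan(-source_pan)  # opposite side
    for n, sample in enumerate(dry):
        left[n] += dry_l * sample
        right[n] += dry_r * sample
    for n, sample in enumerate(wet):
        left[n + offset] += wet_l * sample
        right[n + offset] += wet_r * sample
    return left, right


# A single impulse stands in for both the dry hit and the reverb return.
left, right = place_source_and_return([1.0], [1.0])
print(ms_to_samples(5.0), left[0] > right[0], right[240] > left[240])
```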

[Figure: The UAD-2 Powered Plug-In emulation of the classic EMT 250 Electronic Reverb unit.]

Vocals can be particularly susceptible to losing definition with larger room settings. Especially with shorter pre-delay times, the reverb can “step on” the vocal, robbing it of intelligibility. A longer pre-delay before the reverb kicks in lets the vocal’s clarity and impact cut through, while still giving it a natural “tail” that rings out without blurring. Background vocals are somewhat less critical in this respect, and can often benefit from a larger room setting, which can smooth and blend multiple parts.

Be Creative

We’ve spent most of this column talking about the best ways to use reverb naturally. And for the most part, that’s a good idea. In fact, in most instances, the best use of reverb is to create a mix where its use is pretty much indiscernible.

But as with most effects, experimentation can lead to some great surprises, so don’t be afraid to bend the rules. Try combining a couple of different instances of the same reverb with slightly different parameters and panning them left and right. Or try adding a subtle chorus or distortion to a reverb. Again, subtlety is key here – a little bit of something unusual, buried deeply in the mix, might be just the thing to give your mix that special “something.”

Where do you aim the speakers to give you the smoothest and most consistent sound, and how far apart do you place them to give you a good stereo image? The basic rule is to follow the layout of an equilateral triangle, one with all three sides the same length. The distance between the two monitors should be roughly the same as the distance between one monitor and your nose when you are leaning forward on the console armrest. The speaker axis should be aimed at the halfway point between your furthest-forward and furthest-rearward listening positions, which are typically about 24" (600mm) apart. If you can, also try to line up your ears with the vertical speaker axis (halfway between the woofer and tweeter) so that your normal listening position sits in the best spot possible. If this would have you resting your chin on the console or desktop, you could tilt the monitors back slightly. This keeps your head in the sweet spot whether you're leaning forward adjusting level or EQ, or leaning back and listening to the mix. Don't go crazy trying to get this exact to three decimal places; within an inch or two gets you into the game.
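
The geometry of that rule can be worked out in a few lines. This Python sketch is illustrative only: it assumes the speakers sit side by side, that the forward (leaning-in) head position is the apex of the equilateral triangle, and that the rearward position lies straight behind it on the center line, 600 mm back by default. The function name is hypothetical.

```python
import math


def monitor_layout(spacing_mm, listening_range_mm=600.0):
    """Equilateral-triangle monitor geometry (hypothetical helper).

    Speakers sit at (+/- spacing/2, 0). The forward listening position is the
    triangle's apex, one spacing away from each speaker; the aim point is half
    the listening range behind it. Returns (apex depth in mm, aim-point depth
    in mm, toe-in angle in degrees measured from straight ahead).
    """
    half = spacing_mm / 2.0
    apex_depth = math.sqrt(spacing_mm ** 2 - half ** 2)  # = spacing * sqrt(3) / 2
    aim_depth = apex_depth + listening_range_mm / 2.0
    toe_in_deg = math.degrees(math.atan2(half, aim_depth))
    return apex_depth, aim_depth, toe_in_deg


apex, aim, toe_in = monitor_layout(1200.0)
print(f"apex {apex:.0f} mm, aim point {aim:.0f} mm, toe-in {toe_in:.1f} degrees")
```

Aiming straight at the apex (a listening range of zero) gives exactly the 30 degrees of toe-in implied by an equilateral triangle; aiming at a point farther back reduces it slightly.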

When a passive system's single amplifier must reproduce the whole audio spectrum, low frequencies rapidly "use up" the amp's headroom. As higher frequencies "ride along" on lower frequency waveforms, they can be chopped off or distorted even though the high frequencies themselves would not be clipping. Separating highs from lows via an active electronic crossover lets a bi-amped system use two different amplifiers. Each is free to drive just one transducer to its safe maximum limit without intermodulation distortion or other interaction between the two drivers.
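
The headroom argument can be demonstrated numerically. This Python sketch is a simplification, assuming ideal hard clipping at ±1.0 and two arbitrary test tones (50 Hz and 5 kHz); real amplifiers clip less abruptly, and the function names are hypothetical. A single amplifier carrying both tones clips wherever the high-frequency tone rides on a low-frequency peak, while each tone on its own, as a bi-amped system would handle them, stays clean.

```python
import math


def hard_clip(x, limit=1.0):
    """Idealized amplifier clipping: anything beyond +/- limit is chopped off."""
    return max(-limit, min(limit, x))


def worst_clipping_error(lf_amp, hf_amp, limit=1.0, sample_rate=48000, n=4800):
    """Drive one amplifier with a 50 Hz tone plus a 5 kHz tone and return the
    largest amount by which clipping changes the waveform (0.0 = no clipping)."""
    worst = 0.0
    for i in range(n):
        t = i / sample_rate
        signal = (lf_amp * math.sin(2 * math.pi * 50 * t)
                  + hf_amp * math.sin(2 * math.pi * 5000 * t))
        worst = max(worst, abs(signal - hard_clip(signal, limit)))
    return worst


# Full-range amp: both tones through one amplifier.
print("single amp:", worst_clipping_error(0.9, 0.3))
# Bi-amped: each amplifier sees only its own band.
print("LF amp only:", worst_clipping_error(0.9, 0.0))
print("HF amp only:", worst_clipping_error(0.0, 0.3))
```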

Q: Is there really a difference between monitors that are just a few hundred dollars and the ones that I see for a few thousand?